示例代码:

1 | #!/usr/bin/env python |

执行结果:

1 | 判别器损失: 0.8938-->(判别真实的: 0.4657 + 判别生成的: 0.4281)... |

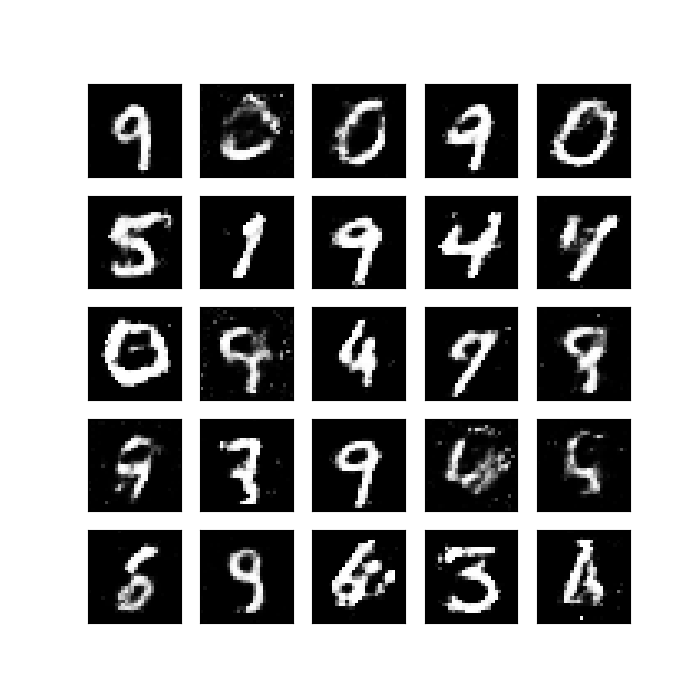

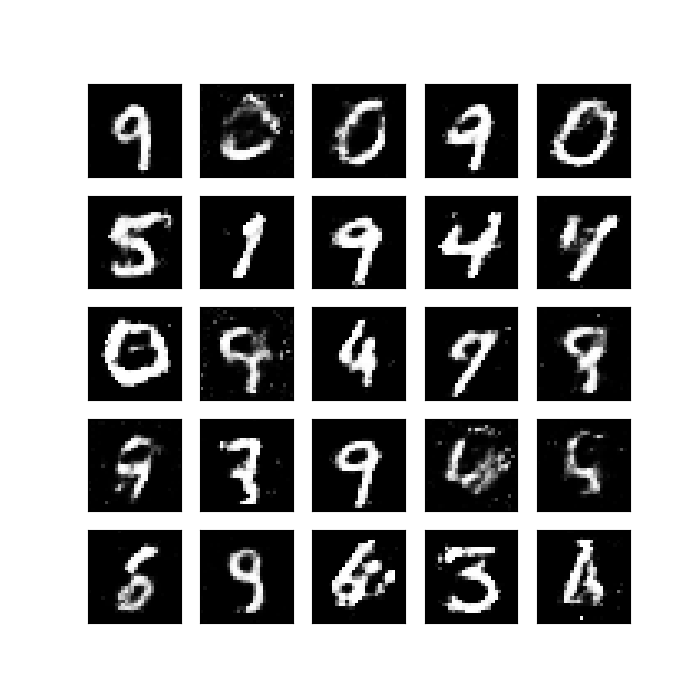

生成的图像:

转载请注明:Seven的博客

1 | #!/usr/bin/env python |

1 | 判别器损失: 0.8938-->(判别真实的: 0.4657 + 判别生成的: 0.4281)... |

转载请注明:Seven的博客

本文标题:TensorFlow实现简单的生成对抗网络-GAN

文章作者:Seven

发布时间:2018年09月03日 - 00:00:00

最后更新:2018年12月11日 - 22:11:30

原始链接:http://yoursite.com/2018/09/03/2018-09-03-TensorFlow-GAN/

许可协议: 署名-非商业性使用-禁止演绎 4.0 国际 转载请保留原文链接及作者。

微信支付

支付宝