AlexNet Background

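AlexNet, published in 2012, is a classic network that achieved the best result in that year's ImageNet challenge (ILSVRC 2012). In the years that followed, more and deeper neural networks were proposed, such as the excellent VGG and GoogLeNet.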

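Its officially released pretrained model reaches a top-1 accuracy of 57.1% and a top-5 accuracy of 80.2%. Compared with traditional machine-learning classification algorithms, this is already an outstanding result.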
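Top-1 accuracy counts a prediction as correct only when the highest-scoring class is the true label. Top-5 accuracy counts it as correct when the true label is anywhere among the five highest-scoring classes. The minimal pure-Python sketch below computes the general top-k version. The function name `top_k_accuracy` and the toy scores are illustrative only and do not come from the original post.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes.

    scores: list of per-sample score lists (one score per class)
    labels: list of true class indices, one per sample
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by score, highest first; keep the first k
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label in top_k:
            hits += 1
    return hits / len(labels)


# Toy example: 3 samples, 4 classes (made-up numbers for illustration)
scores = [
    [0.1, 0.6, 0.2, 0.1],  # best class: 1
    [0.5, 0.1, 0.3, 0.1],  # best class: 0, second best: 2
    [0.3, 0.2, 0.1, 0.4],  # best class: 3, second best: 0
]
labels = [1, 2, 2]

print("top-1:", top_k_accuracy(scores, labels, 1))  # only sample 0 is correct
print("top-2:", top_k_accuracy(scores, labels, 2))  # samples 0 and 1 are correct
```

With AlexNet's 1000 ImageNet classes, the reported figures correspond to `k=1` and `k=5`.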

Architecture overview:

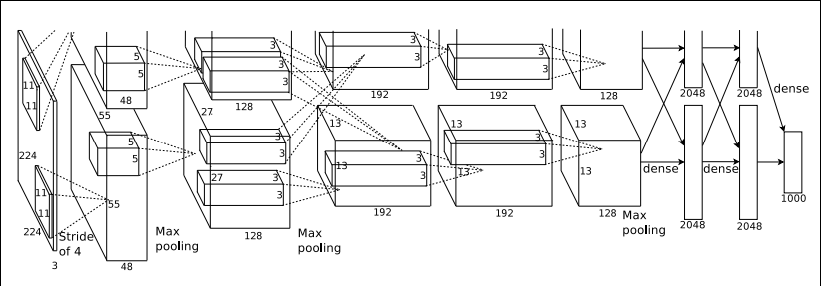

The structure of the AlexNet model is as follows:
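To see how the feature maps shrink through the network, the sketch below applies the standard output-size formula floor((W - K + 2P) / S) + 1 to the commonly cited AlexNet layer settings. It assumes a 227×227×3 input (the paper states 224×224, but 227 is what makes the arithmetic come out to 55×55 after the first layer, as in many implementations). Local response normalization and the original two-GPU split are left out because they do not change these shapes. The names `conv_out`, `ALEXNET_FEATURES` and `trace_shapes` are hypothetical helpers for illustration.

```python
def conv_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a convolution or pooling layer: floor((W - K + 2P) / S) + 1."""
    return (size - kernel + 2 * pad) // stride + 1


# (name, kind, out_channels, kernel, stride, pad); out_channels is None for pooling.
# Assumption: commonly cited AlexNet settings with a 227x227 input.
ALEXNET_FEATURES = [
    ("conv1", "conv", 96, 11, 4, 0),
    ("pool1", "pool", None, 3, 2, 0),
    ("conv2", "conv", 256, 5, 1, 2),
    ("pool2", "pool", None, 3, 2, 0),
    ("conv3", "conv", 384, 3, 1, 1),
    ("conv4", "conv", 384, 3, 1, 1),
    ("conv5", "conv", 256, 3, 1, 1),
    ("pool5", "pool", None, 3, 2, 0),
]


def trace_shapes(size=227, channels=3):
    """Return (layer name, channels, height/width) after each feature layer."""
    shapes = []
    for name, kind, out_ch, k, s, p in ALEXNET_FEATURES:
        size = conv_out(size, k, s, p)
        if kind == "conv":
            channels = out_ch
        # pooling layers leave the channel count unchanged
        shapes.append((name, channels, size))
    return shapes


for name, c, hw in trace_shapes():
    print(f"{name}: {c} x {hw} x {hw}")

# The last feature map is flattened and fed to FC 4096 -> FC 4096 -> FC 1000.
_, c, hw = trace_shapes()[-1]
print("flattened features:", c * hw * hw)
```

The trace runs 55 → 27 → 27 → 13 → 13 → 13 → 13 → 6, so the fully connected part receives 256 × 6 × 6 = 9216 values.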

Example code:

Implementing MNIST handwritten digit recognition with AlexNet

#!/usr/bin/env python
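An MNIST image is only 28×28×1, far smaller than AlexNet's ImageNet input. The sketch below applies the same output-size formula to the first four AlexNet layers with a 28×28 input. With the stock 11×11, stride-4 first convolution, the spatial size drops to zero at the second pooling layer, so the network cannot be applied unchanged. Common workarounds are to resize MNIST images up (for example to 227×227) or to shrink kernel sizes and strides. The helper names `conv_out`, `FIRST_LAYERS` and `trace` are hypothetical and only serve this illustration.

```python
def conv_out(size, kernel, stride=1, pad=0):
    """Spatial output size of a convolution or pooling layer: floor((W - K + 2P) / S) + 1."""
    return (size - kernel + 2 * pad) // stride + 1


# (name, kernel, stride, pad) for the first layers of the commonly cited AlexNet settings
FIRST_LAYERS = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
]


def trace(size):
    """Return (layer name, spatial size) after each layer, stopping once the size is not positive."""
    sizes = []
    for name, k, s, p in FIRST_LAYERS:
        size = conv_out(size, k, s, p)
        sizes.append((name, size))
        if size <= 0:
            break
    return sizes


print("MNIST 28x28:", trace(28))
print("ImageNet 227x227:", trace(227))
```

For a 28×28 input the sizes run 5 → 2 → 2 → 0, while a 227×227 input gives 55 → 27 → 27 → 13.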

When reposting, please credit: Seven's Blog