LeNet5

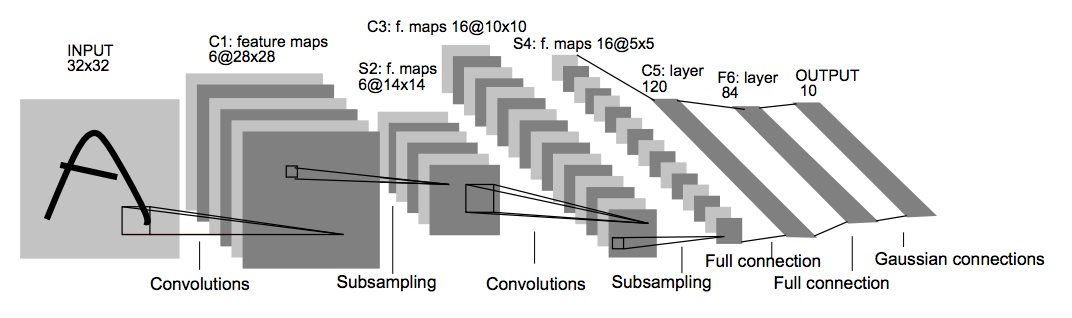

LeNet5 诞生于 1994 年,是最早的卷积神经网络之一,并且推动了深度学习领域的发展。自从 1988 年开始,在许多次成功的迭代后,这项由 Yann LeCun 完成的开拓性成果被命名为 LeNet5(参见:Gradient-Based Learning Applied to Document Recognition)。

LeNet5 的架构基于这样的观点:图像的特征分布在整张图像上,以及带有可学习参数的卷积是一种用少量参数在多个位置上提取相似特征的有效方式。

在那时候,没有 GPU 帮助训练,甚至 CPU 的速度也很慢。因此,能够保存参数以及计算过程是一个关键进展。这和将每个像素用作一个大型多层神经网络的单独输入相反。

LeNet5 阐述了那些像素不应该被使用在第一层,因为图像具有很强的空间相关性,而使用图像中独立的像素作为不同的输入特征则利用不到这些相关性。

LeNet5特点

LeNet5特征能够总结为如下几点:

1)卷积神经网络使用三个层作为一个系列: 卷积,池化,非线性

2) 使用卷积提取空间特征

3)使用映射到空间均值下采样(subsample)

4)双曲线(tanh)或S型(sigmoid)形式的非线性

5)多层神经网络(MLP)作为最后的分类器

6)层与层之间的稀疏连接矩阵避免大的计算成本

LeNet5实现

1 | #!/usr/bin/env python |

执行结果

1 | FUNCTION READY!! |

转载请注明:Seven的博客